Guidance and resources

Monitoring and Evaluation Framework

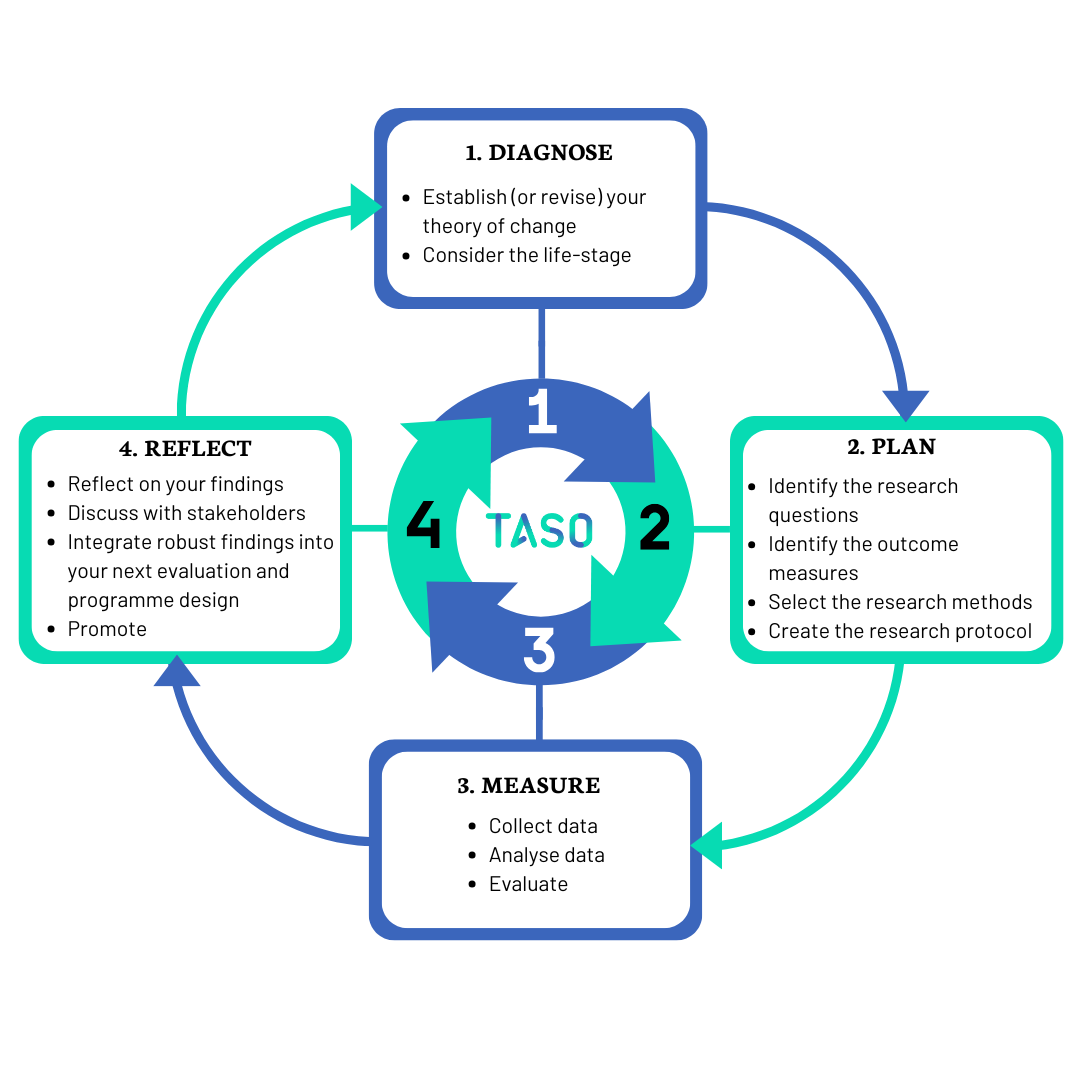

The Monitoring and Evaluation Framework (MEF) contains four main steps to consider when planning your evaluation, from establishing your theory of change in ‘Step 1: Diagnose’ to reporting on your findings in ‘Step 4: Reflect’.

The evaluation process is iterative: your findings at each step support continuous improvement. For example, the findings from ‘Step 3: Measure’ should feed into the refinement of your theory of change, research questions and methods in ‘Step 4: Reflect’.

The framework covers activities ranging from pre-16 outreach through to initiatives that address gaps in graduate outcomes. It is not intended to replace in-house evaluation support.

The sections below guide you through the MEF step by step.