2. Plan

Identifying Research Questions

These overarching questions will determine the scope and approach of your evaluation.

The first research question should be about the causal impact of the intervention:

“Did [intervention] increase (or reduce) [outcome] among [group]?”

For example:

- Did peer mentoring reduce stress among nursing students?

- Did the online Cognitive Behavioural Therapy programme reduce depression among students?

You may also wish to include research questions about other effects of the intervention, or about how it was implemented and experienced by recipients, such as:

- Was the initiative delivered the way we expected?

- Are we targeting the right students?

- What was the cost-effectiveness of the initiative?

To help formulate your questions, you should also consider:

- Who will use the findings and how?

- What do stakeholders need to learn from the evaluation?

- What questions will you be able to answer and when?

Identifying Outcome Measures

Once you have established your research questions, you will need to consider which outcome measures best enable you to answer them and demonstrate success. The measures should link closely with the process, outcomes and impact you have recorded in your Theory of Change. A simple way to think about which measures to select is:

“I’ll know [outcome reached] when I see [indicator]”

Evaluation guidance on outcome measures in a non-clinical context

Tailored guidance on selecting outcome measures for the evaluation of interventions designed to improve mental health is below.

This guidance focuses on measures that are practical and suitable for use in the non-clinical space.

Guidance for evaluating initiatives within a non-clinical context

It is likely that evaluations will also include outcomes relating to how students are progressing on their course, as well as to their mental health. TASO’s Common Outcome Measures table sets out common outcome indicators for initiatives at each stage in the student life-cycle, from Key Stage 3 through to post‑graduation, and can help you identify suitable outcome measures.

In some cases, evaluations of interventions designed to improve student mental health may also incorporate outcomes which are more typically used in widening participation, for example sense of belonging. TASO has developed and validated a widening participation questionnaire – the Access and Success Questionnaire (ASQ) – which provides a set of validated scales that can be used to measure the key intermediate outcomes these activities aim to improve.

If you need to develop new indicators beyond those outlined above, consider the following hierarchy of measures, ordered by their reliability and validity:

0. Output only,

1. Self-report subjective (e.g. perceived knowledge),

2. Self-report objective (e.g. actual knowledge),

3. Validated scales (e.g. from academic research, externally-administered tests),

4. Interim or proxy outcome (e.g. GCSE selections, sign-ups to events), or

5. Core impact (e.g. A level attainment, university acceptances, continuation).

Generally, evaluations should focus on measures at the higher end of this scale (i.e. level 3 and above).

Selecting a Research Method

Impact evaluation

There are many different methods that can be used to understand both whether your initiative is having an impact and how it is operating in practice. In this section, we focus on the primary research method: the method used to investigate your primary research question, which will enable you to measure the causal impact of your initiative on an outcome.

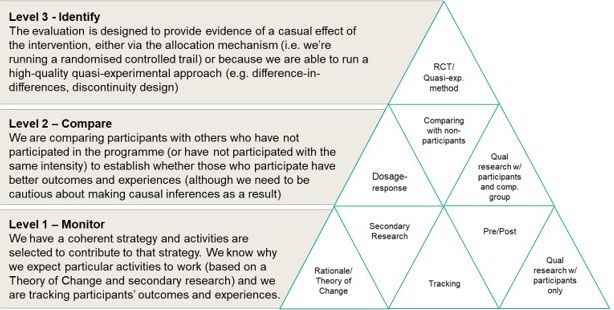

Overall, some research methods are better suited to this question than others. Following the OfS’ Standards of Evidence, we conceptualise three levels of impact evaluation:

- Level 1: Monitoring;

- Level 2: Comparing; and

- Level 3: Identifying.

Over time, we would encourage all programmes across the sector to move towards having Level 2 or, where feasible, Level 3 impact evaluations. However, this process may occur over a number of years, especially for new or complex initiatives.

The diagram below summarises some of the key research methods at each level. You can also download a summary of the research methods at each level.

Process evaluation

At this stage, you should also consider the best way to collect data about how the initiative worked, whether everything went to plan, and how it was experienced by participants and partners. The methodology guidelines also contain an overview of common process evaluation methods.

Key considerations for evaluating interventions designed to improve student mental health

1. Choose a method that allows you to robustly understand impact.

Randomised controlled trials (RCTs) are one of the most robust ways to test interventions. Participants are randomly assigned either to a group that receives the intervention or to a group that does not, so the two groups should, on average, be similar in both observable and unobservable characteristics, and differences in their outcomes can more confidently be attributed to the intervention. The Toolkit contains many examples of studies using a wait-list control design; the key benefit of this design is that the control group still receives the intervention, just at a later date, once outcomes have been measured in both groups. TASO’s guidance on evaluating complex interventions using RCTs may be particularly useful.
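As a minimal sketch of how participants might be allocated in a two-arm wait-list design, the Python below uses simple (unstratified) randomisation with a fixed seed, so the allocation can be reproduced and audited. The function name `assign_waitlist` and the student IDs are hypothetical. Real trials often use stratified or block randomisation instead, and whichever procedure you choose should be set out in advance in your research protocol.

```python
import random


def assign_waitlist(participant_ids, seed=None):
    """Randomly split participants into an 'intervention now' arm and a
    'wait-list' arm that receives the intervention after outcomes are measured.

    Simple (unstratified) randomisation: shuffle, then cut the list in half.
    With an odd number of participants, the wait-list arm gets the extra person.
    """
    ids = list(participant_ids)
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    rng.shuffle(ids)
    half = len(ids) // 2
    return {
        "intervention": sorted(ids[:half]),
        "waitlist": sorted(ids[half:]),
    }


# Usage with hypothetical student IDs.
students = [f"S{i:03d}" for i in range(1, 12)]  # 11 students
allocation = assign_waitlist(students, seed=2024)
print(len(allocation["intervention"]), len(allocation["waitlist"]))  # 5 6
```

Because the wait-list arm is only delayed, not denied, this allocation step is the same whether the control group later receives the intervention or not; what changes is the timing of delivery after the follow-up measurement.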

2. Choose the right outcomes.

Outcomes should be measured using validated scales both before and after the intervention has been received. Because evidence on the longer-term effects of interventions is often lacking, it is important to measure outcomes at multiple time points (e.g. three-, six- and 12-month follow-ups) rather than only immediately afterwards. There is also a lack of evidence on the impact of interventions on student outcomes such as attainment, retention and progression, and providers should seek to embed these outcomes into their evaluation plans.
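To illustrate measuring at several time points, the sketch below computes, for each follow-up, the mean change from baseline in each group and the difference between the groups. It assumes a validated scale on which lower scores are better (as for many depression or stress measures), a hypothetical data layout of one dictionary per participant keyed by month, and made-up toy scores. It is a descriptive summary only; a real analysis would also need a pre-specified statistical model, confidence intervals and a plan for handling missing data.

```python
from statistics import mean


def mean_change(records, month):
    """Mean change from baseline (month 0) to `month`, skipping participants
    whose score at that follow-up is missing (None)."""
    changes = [r[month] - r[0] for r in records if r.get(month) is not None]
    return mean(changes)


def difference_in_change(treatment, control, month):
    """Treatment-minus-control difference in mean change from baseline.
    With a lower-is-better scale, a negative value favours the treatment group."""
    return mean_change(treatment, month) - mean_change(control, month)


# Hypothetical toy scores: {month: score}; None marks a missed follow-up.
treatment = [
    {0: 20, 3: 15, 6: 16, 12: 17},
    {0: 18, 3: 14, 6: 15, 12: None},
]
control = [
    {0: 19, 3: 18, 6: 18, 12: 19},
    {0: 21, 3: 20, 6: 21, 12: 20},
]

for month in (3, 6, 12):
    print(month, difference_in_change(treatment, control, month))
```

Reporting the difference at each follow-up, rather than only at the first one, shows whether an early effect is sustained or fades over time.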

3. Make sure you have a big enough sample.

A common weakness of existing studies is small sample sizes, which make robust quantitative analysis difficult. This is a particular challenge when working with specific cohorts of students, which may be small. To address this, effective evaluations may cover interventions running across multiple programmes, or involve collaboration between institutions.
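A power calculation before the evaluation starts can show whether the available cohort is large enough. The sketch below uses the standard normal-approximation formula for comparing mean outcomes between two equal-sized arms, n per arm = 2(z_α + z_β)² / d², where z_α and z_β are the standard normal quantiles for the two-sided significance level and the desired power, and d is the standardised effect size (Cohen's d). The defaults (5% significance, 80% power) are common conventions rather than TASO requirements, and the effect sizes and attrition rate in the usage lines are illustrative assumptions. For a final design, especially a clustered or multi-site one, a dedicated power-analysis tool or a statistician should be consulted.

```python
import math
from statistics import NormalDist


def _z_sum(alpha, power):
    """Sum of standard normal quantiles for a two-sided test at `alpha`
    with the requested statistical power."""
    z = NormalDist()  # standard normal distribution (mean 0, sd 1)
    return z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)


def n_per_group(effect_size, alpha=0.05, power=0.8, attrition=0.0):
    """Approximate participants needed in EACH arm of a two-arm comparison
    of means, inflated for the expected share lost before follow-up."""
    n = 2 * _z_sum(alpha, power) ** 2 / effect_size ** 2
    return math.ceil(n / (1 - attrition))


def minimum_detectable_effect(n_per_arm, alpha=0.05, power=0.8):
    """Smallest standardised effect size detectable with `n_per_arm`
    participants (after attrition) in each arm."""
    return _z_sum(alpha, power) * math.sqrt(2 / n_per_arm)


print(n_per_group(0.5))                          # 63 per arm, moderate effect
print(n_per_group(0.2, attrition=0.2))           # 491 per arm, small effect, 20% attrition
print(round(minimum_detectable_effect(100), 2))  # 0.4 with 100 per arm
```

Because the required sample grows with 1/d², halving the effect size you hope to detect roughly quadruples the sample needed, which is one reason pooling across programmes or institutions can help.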

4. Consider who is in your sample.

Much of the existing research on student mental health interventions has been undertaken with self-selecting groups of predominantly white, female undergraduates, often on social science courses. Consider whether your evaluation design will give you a sample from which the results of your analysis can be generalised to the population you intend to reach.
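One simple descriptive check is to compare the make-up of your achieved sample with the population you intend to generalise to. The sketch below reports, for each group, the percentage-point gap between its share of the sample and its share of the target population. The function name `composition_gaps`, the characteristic, the counts and the population shares are all hypothetical; in practice, the population figures might come from your provider's student records. Large gaps signal that results may not generalise, but this check does not correct for them (that would need approaches such as reweighting or targeted recruitment).

```python
def composition_gaps(sample_counts, population_shares):
    """Percentage-point gap between each group's share of the sample and its
    share of the target population (positive = over-represented)."""
    total = sum(sample_counts.values())
    return {
        group: round(100 * (sample_counts.get(group, 0) / total - share), 1)
        for group, share in population_shares.items()
    }


# Hypothetical example: gender make-up of a self-selected sample of 100
# students versus the (assumed) make-up of the target student population.
sample = {"female": 70, "male": 28, "other": 2}
population = {"female": 0.55, "male": 0.43, "other": 0.02}
print(composition_gaps(sample, population))
```

The same comparison can be repeated for other characteristics relevant to your intended population, such as ethnicity, level of study or subject area.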

Creating a Research Protocol

A Research Protocol is a written document that describes the overall approach that will be used throughout your intervention, including its evaluation.

A Research Protocol is important because it:

- Lays out a cohesive approach to your planning, implementation and evaluation

- Documents your processes and helps create a shared understanding of aims and results

- Helps anticipate and mitigate potential challenges

- Forms a basis for the management of the project and the assessment of its overall success

- Documents the practicalities of implementation

Reasons for creating a Research Protocol include:

- Setting out what you are going to do in advance is an opportunity to flush out any challenges and barriers before going into the field.

- Writing a detailed protocol allows others to replicate your intervention and evaluation methodology, which is an important aspect of contributing to the broader research community.

- Setting out your rationale and expectations for the research, and your analysis plan, before doing the research gives your results additional credibility.

The protocol should be written as if it’s going to end up in the hands of someone who knows very little about your organisation, the reason for the research, or the intervention. This is to future-proof the protocol, but also to ensure that you document all your thinking and the decisions you have made along the way.

Research protocol examples developed by HE providers seeking to evaluate student mental health interventions are given below.

Research protocol examples

To develop your own protocol, please use the template below:

Further guidance

The following video is a recording of a webinar TASO held on 17 June 2020 on the second step in its evaluation guidance – Step 2: Plan.

The session covers how to:

- Formulate evaluation questions

- Identify outcome measures

- Choose a research method