Interventions to improve access and widen participation in higher education are designed to produce a range of outcomes, and effective evaluation of such activities has become increasingly important.

Many interventions are not compatible with conventional forms of causal inference, such as systematic review, randomised controlled trial and quasi-experimental design, due to their complex nature, resource issues, emergent or developmental features, or small scale.

These resources focus on some alternative methodologies, specifically designed for impact evaluation, that can be used with small cohorts: so-called ‘small n’ impact evaluations.

Impact evaluation is important but can be challenging. TASO promotes the use of rigorous experimental and quasi-experimental impact evaluation methodologies as these are often the best way to establish causality. However, these types of impact evaluation sometimes raise challenges:

- They are effective in describing a causal link between an intervention and an outcome, but less good at explaining the mechanisms that cause the impact or the conditions under which an impact will occur.

- They require a reasonably large number of cases that can be divided into two or more groups. Cases may be individual students or groups that contain individuals, such as classrooms, schools or neighbourhoods.

- Most types of experiment – and some types of quasi-experiment – require evaluators to be able to change or influence (manipulate) the programme or intervention that is being evaluated. However, this can be difficult or even impossible, perhaps because a programme or intervention is already being delivered and the participants already confirmed or because there are concerns about evaluators influencing eligibility criteria.

- Experimental and quasi-experimental evaluation methodologies can sometimes struggle to account for the complexity of programmes implemented within multifaceted systems where the relationship between the programme/intervention and outcome is not straightforward.

An alternative group of impact evaluation methodologies, sometimes referred to as ‘small n’ impact methodologies, can address some of these challenges:

- They only need a small number of cases or even a single case. The case is understood to be a complex entity in which multiple causes interact. Cases could be individual students or groups of people, such as a class or a school. This can be helpful when a programme or intervention is designed for a small cohort or is being piloted with a small cohort.

- They can ‘unpick’ relationships between causal factors that act together to produce outcomes. In small n methodologies, multiple causes are recognised and the focus of the impact evaluation switches from simple attribution to understanding the contribution of an intervention to a particular outcome. This can be helpful when services are implemented within complex systems.

- They can work with emergent interventions where experimentation and adaptation are ongoing. Generally, experiments and quasi-experiments require a programme or intervention to be fixed before an impact evaluation can be performed. Small n methodologies can, in some instances, be deployed in interventions that are still changing and developing.

- They can sometimes be applied retrospectively. Most experiments and some quasi-experiments need to be implemented at the start of the programme or intervention. Some small n methodologies can be used retrospectively on programmes or interventions that have finished.

Further guidance

What is evaluation?

Evaluation is a broad concept that can be difficult to distinguish both from other types of research and related practices such as monitoring and performance management. There is no single, widely accepted definition of evaluation. The Magenta Book, the UK government’s guidance on evaluation, defines evaluation as:

“A systematic assessment of the design, implementation and outcomes of an intervention. It involves understanding how an intervention is being, or has been, implemented and what effects it has, for whom and why. It identifies what can be improved and estimates its overall impacts and cost-effectiveness.”

The Magenta Book

Definitions of evaluation often emphasise that evaluations make judgements while also maintaining a level of objectivity or impartiality. This helps distinguish evaluation from other types of research. So, for example, Mark et al. (2006), in the introduction to the Sage Handbook of Evaluation, define evaluation as:

“A social and politicized practice that nonetheless aspires to some position of impartiality or fairness, so that evaluation can contribute meaningfully to the well-being of people in that specific context and beyond.”

Sage Handbook of Evaluation

Some definitions of evaluation also emphasise that it uses research methods, and this is helpful in distinguishing evaluation from similar practices such as monitoring and performance management. For example, Rossi and colleagues in Evaluation: A Systematic Approach define evaluation as:

“The application of social research methods to systematically investigate the effectiveness of social intervention programs in ways that are adapted to their political and organizational environments and are designed to inform social action to improve social conditions.”

Evaluation: A Systematic Approach

Fox and Morris (2020) in the Blackwell Encyclopaedia of Sociology combine these different elements and define evaluation as:

“The application of research methods in order to make judgments about policies, programs, or interventions with the aim of either determining or improving their effectiveness, and/or informing decisions about their future.”

Blackwell Encyclopaedia of Sociology

Evaluation takes various forms, and distinctions can be made according to the aim of the evaluation. Impact evaluations are concerned with establishing the existence or otherwise of a causal connection between the programme or intervention being evaluated and its outcomes. The most common type of impact evaluation involves comparing the average outcome for an intervention group and a control group. Sometimes, cases (for example students) are assigned randomly to intervention and control groups: this is known as a randomised controlled trial. This guide is about a different approach to impact evaluation that involves only one or a small number of cases and does not involve a control group. Causality can still be inferred but cannot generally be quantified.

Why is impact evaluation important?

- Impact evaluations help decide whether a programme or scheme should be adopted, continued or modified for improvement. They help institutions to understand what works and to make better decisions on what to invest in and when to disinvest.

- As outlined by the Office for Students (OfS), all evaluations funded or co-funded by Access and Participation Plans should contain some element of impact evaluation. This is important in demonstrating that initiatives have the desired impact on student outcomes.

- Impact evaluation is particularly important when designing and implementing an innovative programme or service to ensure that it has the intended effect and does not lead to unintended negative outcomes.

What is causation?

Causal inference is key to impact evaluation. An impact evaluation should allow the evaluator to judge whether the intervention being evaluated caused the outcome being measured. Causation differs from correlation. If you are unsure of this difference, please watch this TASO causality webinar.

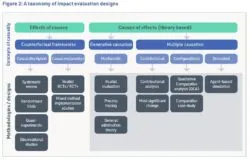

Different approaches to impact evaluation can invoke different understandings of causal inference. There is a fundamental distinction between the two types of question that social scientists ask when using the tools and techniques of social science in evaluation. First, they may ask the question: what are the effects of a causal factor (i.e. an intervention or treatment)? Second, and in contrast, they may ask the question: what are the causal factors that give rise to an effect? These questions are what Dawid (2007) calls ‘effects of causes’ and ‘causes of effects’ type questions (Figure 1).

A classic ‘effects of causes’ approach to impact evaluation would be a randomised controlled trial (RCT) design. This is what the OfS standards of evidence refer to as a ‘Type 3’ evaluation. Randomised experiments are used widely in evaluation and are designed to establish whether there is a causal relationship between an intervention and its outcomes. In its simplest form, a randomised experiment involves the random allocation of subjects to intervention and control groups. Subsequent differences in the average outcomes observed in these two groups are understood to provide an unbiased estimate of the causal effect of the intervention. The design draws on the tradition of controlled experiments in medicine, psychology and agriculture but has been developed to evaluate social programmes. Where randomised experiments are not possible, several ‘quasi-experimental’ designs have been developed that replicate the experimental logic (Shadish, Cook and Campbell, 2002).
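The simplest form of this logic can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the student identifiers, the outcome scores, the fixed seed and the helper names `assign_groups` and `difference_in_means` are all hypothetical and do not come from the guidance.

```python
import random
from statistics import mean


def assign_groups(case_ids, seed=0):
    """Randomly split cases into intervention and control groups."""
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(outcomes, intervention, control):
    """Estimate the average effect as mean(intervention) - mean(control)."""
    treated = mean(outcomes[case] for case in intervention)
    untreated = mean(outcomes[case] for case in control)
    return treated - untreated


# Hypothetical student identifiers, allocated before any outcome is observed.
students = [f"student_{i}" for i in range(8)]
intervention, control = assign_groups(students)

# Hypothetical outcome scores, measured after the intervention has run.
scores = [62, 58, 71, 66, 55, 60, 68, 64]
outcomes = dict(zip(students, scores))

estimate = difference_in_means(outcomes, intervention, control)
print(f"Estimated average effect: {estimate:.1f}")
```

With only eight cases, an estimate like this would be far too imprecise to support a causal claim, which is one reason experimental designs need a reasonably large number of cases.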

In a ‘causes of effects’ approach to impact evaluation, the intervention is understood as producing effects through mechanisms which, as Cartwright and Hardie (2012) describe, act as just one ingredient in a ‘causal cake’. The various relevant ingredients need to be identified, defined and explored before the causal question can be fully addressed, and causation or otherwise inferred. Here the emphasis is on an explanation of the effects or outcomes that are altered through the triggering of causal mechanisms. Specifically, the evaluation is concerned with the role of the intervention in these processes. In other words, causes work together in causal packages to produce effects (Cartwright & Hardie, 2012). The ‘small n’ approaches to evaluation described here are based on the ‘causes of effects’ approach to impact evaluation.

What is small n evaluation?

Small n impact evaluations are evaluation designs that involve only a small number of cases, insufficient to construct a ‘traditional’ counterfactual impact evaluation in which statistical tests of the difference between an intervention group and a control group are used to estimate impact. Instead of a counterfactual design, mid-level theory – explaining why the intervention will have an impact – together with alternative causal hypotheses are used to establish causation beyond reasonable doubt.

Small n impact evaluation designs should be considered when one or more of the following are true:

- There is one case or a small number of cases. A case could be a person, an organisation, a school or a classroom.

- There is no option to create a counterfactual or control group.

- There is considerable heterogeneity in the population receiving the intervention, the wider context of the intervention or the intervention itself. Such heterogeneity would make it impossible to estimate the average treatment effect using a traditional counterfactual evaluation, as different sub-groups will be too small for statistical analysis (White and Phillips 2012).

- There is substantial complexity in the programme being evaluated, meaning that an evaluation designed to answer the question ‘does the programme cause outcome X’ may make little sense, whereas an evaluation design that recognises that the programme is just one ingredient in a ‘causal cake’ may make more sense.

In these situations, a small n impact evaluation still offers the possibility of making causal statements about the relationship between an intervention and an outcome.

Important limitations of small n methods include:

- Many of these methods do not allow evaluators to quantify the size of an impact. This can also reduce options for subsequent economic evaluation.

- These methods all involve detailed information gathering at the level of the case; in some small n impact evaluations, gathering in-depth qualitative data from one or more cases could be just as time-consuming and resource-intensive as data collection in a traditional, counterfactual impact evaluation.

- All evaluation requires that evaluators have the necessary skills in evaluation design, data collection, analysis and report writing. However, in a small n impact evaluation, the evaluator additionally needs a deeper knowledge of the programme and the context within which it is being implemented than might typically be required in a traditional, counterfactual impact evaluation.

How do small n impact evaluations work?

Small n impact evaluations draw on different understandings of causation from ‘traditional’ counterfactual impact evaluations.

All the small n methodologies that we describe have certain elements in common:

- Mid-level theory: All the approaches to impact evaluation associated with uncovering ‘causes of effects’ involve specifying a mid-level theory or a Theory of Change together with alternative causal hypotheses. Causation is then established beyond reasonable doubt by collecting evidence to validate, invalidate or revise hypothesised explanations (White and Phillips 2012). Mid-level, or middle range, theory is a sociological concept that attempts to combine high-level abstract concepts with concrete empirical examples. As such, it makes hypothesis development possible by bringing data and evidence together with theoretical constructs to help make sense of the subject under investigation. Mid-level theories can also support a focus on causal mechanisms operating at a more general level than the context of specific individual interventions. As a result, they encourage the identification of patterns and ‘regularities’ across a range of interventions or contexts. This process can support the development of generalisable conclusions and, therefore, the transfer of hypotheses between related interventions or contexts.

- Cases: Key to all small n approaches is the concept of the ‘case’. However, small n methodologies are not ‘case studies’. Small n, case-based methodologies are varied but Befani and Stedman-Bryce (2017) suggest that case-based methods can be broadly typologised as either between-case comparisons (for example, qualitative comparative analysis) or within-case analysis (for example, process tracing).

- Mixed method: Generally, quantitative and qualitative data are used, with no sharp distinction made between quantitative and qualitative methods.

- Complexity: In counterfactual approaches, the two concepts of bias and precision are central to managing uncertainty. Sources of uncertainty are controlled through both research design (randomisation where possible and sample size/statistical power) and precision (e.g. methods of statistical inference, computation of confidence intervals, p-values, effect sizes and/or Bayes factors). In contrast, small n approaches are case-based, and the multifaceted exploration of complex issues in real-life settings allows these approaches to account for uncertainty through invoking concepts centred on ideas of complexity.

- Generative and multiple causation: Within approaches to small n impact evaluation, Stern and colleagues (2012) make a broad distinction between approaches based on generative causation and those based on multiple causation. Multiple causation depends on combinations of causes that lead to an effect, whereas generative causation understands causation as the transformative potential of a phenomenon and is closely associated with identifying mechanisms that explain effects (Pawson and Tilley 1994).

Getting started with a small n evaluation

Before starting any impact evaluation, consider the following key questions about resources and constraints, and only start an evaluation if they can be answered satisfactorily.

- Does the institution have sufficient resources (e.g. direct or in-kind funding) to conduct an evaluation? Issues to consider may include the burden associated with data collection for both students and practitioners, ethics, likely response rates, level of engagement and the possible impact that data collection may have on the programme and student outcomes.

- When is the evaluation report needed and can the evaluation realistically deliver within this timescale? In the context of higher education, the academic calendar (i.e. holidays) should be considered alongside the nature of the data collection required and the timeframe of the evaluation.

- Do the institution’s staff have the necessary expertise (i.e. knowledge and skills) and capacity (i.e. time and resources)?

Small n impact evaluation designs should be considered when one or more of the following are true:

- There is one case or a small number of cases. (A case could be an organisation as well as a person.)

- There is no option to create a counterfactual or control group.

- There is considerable heterogeneity in the population receiving the intervention, the wider context of the intervention or the intervention itself. Such heterogeneity would make it impossible to estimate the average treatment effect using a traditional counterfactual evaluation as the different sub-groups will be too small for statistical analysis (White and Phillips 2012).

- There is substantial complexity in the programme being evaluated, meaning that an evaluation designed to answer the question ‘does the programme cause outcome X’ may make little sense, whereas an evaluation design that recognises that the programme being evaluated is just one ingredient in a ‘causal cake’ may make more sense.

Befani (2020) has developed a tool for choosing appropriate impact evaluation methodologies. It covers a wide range of ‘small n’ impact methods as well as ‘traditional’ counterfactual evaluation designs. We strongly recommend that you familiarise yourself with this tool and use it to help decide which small n impact evaluation methodology to use.

For all the small n methodologies described here, an important starting point is to develop a detailed Theory of Change.

Theory of change

Overview

Theory of change is not a small n impact evaluation; rather, it is a precursor to undertaking most small n impact evaluations.

A useful theory of change must set out clearly the causal mechanisms by which the intervention is expected to achieve its outcomes (HM Treasury 2020). The Magenta Book (HM Treasury 2020) details how more sophisticated theory of change exercises produce a detailed and rigorous assessment of the intervention and its underlying assumptions, including the precise causal mechanisms that lead from one step to the next, alternative mechanisms to the same outcomes, the assumptions behind each causal step, the evidence that supports these assumptions, and how different contextual, behavioural and organisational factors may affect how, or whether, outcomes occur.

What is involved?

There is no set method for developing a theory of change. The process often starts with articulating the desired (long-term) change that a programme or intervention intends to achieve, based on a number of assumptions that hypothesise, project or calculate how change can be enabled. The following questions, based closely on the Early Intervention Foundation’s 10 Steps for Evaluation Success, can be used to structure the process of building a theory of change:

- What is the intervention’s primary intended outcome?

- Why is the primary outcome important and what short and long-term outcomes map to it?

- Who is the intervention for?

- Why is the intervention necessary?

- Why will the intervention add value?

- What outputs are needed to deliver the short-term outcomes?

- What will the intervention do?

- What inputs are required?

To be a useful starting point for a small n impact evaluation, the theory of change must set out clearly the causal mechanisms by which the intervention is expected to achieve its outcomes. An understanding of the causal mechanisms will develop from both engagement with key informants and an understanding of the scientific evidence base.

The development of a theory of change is fundamentally participatory. Evaluators should involve a variety of stakeholders and, therefore, a variety of perspectives. The process of developing a theory of change should be based on a range of rigorous evidence, including local knowledge and experience, past programming material and social science theory. It is common to use workshops as part of the process, but document reviews, evidence reviews and one-to-one interviews with key informants are also likely to feature.

Case study: Theory of change (PDF)

Briefing: Theory of change (PDF)

Useful resources

Many organisations have produced guidance on the theory of change.

TASO has produced guidance on developing a theory of change as part of its wider evaluation guidance. This is supported by videos discussing theory of change and how to run a theory of change workshop.

Many other organisations have also produced guides. A few of these include:

Asmussen, K., Brims, L. and McBride, T. (2019) 10 steps for evaluation success, London: Early Intervention Foundation. See pp. 15–26.

Noble, J. (2019) Theory of change in ten steps, London: New Philanthropy Capital.

Rogers, P. (2014). Theory of Change, Methodological Briefs: Impact Evaluation 2, UNICEF Office of Research, Florence.

A helpful worked example of how to build a Theory of Change produced by ActKnowledge and the Aspen Institute Roundtable on Community Change is available on Centre for Theory of Change.

Realist evaluation

Overview

A realist evaluation is a theory-led approach to evaluation that seeks to understand what works for whom, in what circumstances and in what respects, and therefore when an intervention is more likely to succeed.

Pawson and Tilley’s (1997) starting point for setting out the realist approach to evaluation is to argue that the ‘traditional’ experimental evaluation is flawed because any attempt to reduce an intervention to a set of variables and control for difference by using an intervention and control group strips out context. They assume a different, ‘realist’ model of explanation in which ‘causal outcomes follow from mechanisms acting in contexts’ (Pawson and Tilley 1997, p. 58). Context-Mechanism-Outcome configurations (CMOs) are thus key to impact evaluation.

A mechanism explains what it is about a programme that makes it work. Mechanisms are not variables but accounts that cover individual agency and social structures. They are mid-level theories that spell out the potential of human resources and reasoning (Pawson and Tilley 1997).

Causal mechanisms and their effects are not fixed but are contingent on context. A programme will only be effective if the contextual conditions surrounding it are conducive (Pawson and Tilley 1994).

What is involved?

There is still much debate about exactly how to undertake a realist evaluation; however, it is possible to set out some key elements of any realist evaluation.

The starting point is mid-level theory building; ‘empirical work in programme evaluation can only be as good as the theory which underpins it’ (Pawson and Tilley 1997, p. 83).

Identifying programme mechanisms is key. Wong and colleagues (2013b) suggest that one way to identify a programme mechanism is to reconstruct, in the imagination, the reasoning of participants or stakeholders. They also note that mechanisms cannot be seen or measured directly (because they happen in people’s heads or at different levels of reality from the one being observed). There will potentially be many mechanisms and the role of the realist researcher is to identify the ‘main mechanisms’. The ‘causes’ of outcomes are not simple, linear or deterministic. This is partly because programmes often work through multiple mechanisms and partly because a mechanism is not inherent to the intervention, but is a function of the participants and the context.

Mechanisms are context‐sensitive and the evaluation must develop an ‘understanding of how a particular context acts on a specific program mechanism to produce outcomes – how it modifies the effectiveness of an intervention’ (Wong et al. 2013b, p. 9). Pulling these elements together, the scientific realist evaluator always constructs their explanation around the three vital ingredients of context, mechanism and outcome, which Pawson and Tilley refer to as context-mechanism-outcome configurations.

Although both quantitative and qualitative data are used in realist evaluation, there is generally more emphasis on the iterative gathering of qualitative data that allows for theory to be developed and explored.

The standard realist data matrix would make comparisons of variations in outcome patterns across groups, but those groups would not be experimental and control groups. Instead, they would be defined by CMO configurations, with the evaluator running a systematic range of comparisons across a series of studies to understand which combination of context and mechanism is most effective (Pawson and Tilley 1994).
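One way to picture such a matrix is as a simple tabulation of outcome patterns by configuration. The sketch below is an assumption-laden illustration, not a realist analysis in itself: the contexts, mechanisms, observations and the helper name `outcome_patterns` are hypothetical, and in practice the configurations would be developed iteratively from theory and qualitative evidence.

```python
from collections import defaultdict


def outcome_patterns(observations):
    """Group observations by (context, mechanism) and return the share
    of cases in each configuration where the outcome was achieved."""
    grouped = defaultdict(list)
    for obs in observations:
        key = (obs["context"], obs["mechanism"])
        grouped[key].append(obs["outcome_achieved"])
    return {key: sum(flags) / len(flags) for key, flags in grouped.items()}


# Hypothetical observations; every label here is illustrative only.
observations = [
    {"context": "commuter student", "mechanism": "sense of belonging", "outcome_achieved": True},
    {"context": "commuter student", "mechanism": "sense of belonging", "outcome_achieved": False},
    {"context": "resident student", "mechanism": "sense of belonging", "outcome_achieved": True},
    {"context": "resident student", "mechanism": "peer support", "outcome_achieved": True},
]

for (context, mechanism), share in outcome_patterns(observations).items():
    print(f"{context} + {mechanism}: outcome achieved in {share:.0%} of cases")
```

The comparison here is between configurations of context and mechanism, not between an intervention group and a control group.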

University Centre Leeds realist evaluation case study (PDF)

Realist evaluation briefing (PDF)

Useful resources

The RAMESES II project, funded by the NIHR, developed quality and reporting standards and resources and training materials for realist evaluation. These are available online via The RAMESES Projects.

There is a Supplementary Guide on realist evaluation (PDF), issued as part of the Magenta Book 2020.

Pawson and Tilley’s influential and widely cited book on scientific realist evaluation is a good starting point for exploring scientific realism:

Pawson, R. and Tilley, N. (1997) Realistic evaluation. London: Sage.

Process tracing

Overview

Process tracing can be defined as:

“The analysis of evidence on processes, sequences, and conjunctures of events within a case for the purposes of either developing or testing hypotheses about causal mechanisms that might causally explain the case.”

Bennett and Checkel 2015, p. 7

It is a methodology that combines pre-existing generalisations with specific observations from within a single case to make causal inferences about that case (Mahoney 2012). It involves the examination of ‘diagnostic’ pieces of evidence within a case to support or overturn alternative explanatory hypotheses; the identification of sequences and causal mechanisms is central (Bennett 2010).

What is involved?

Ricks and Liu (2018) set out the series of steps involved in process tracing:

1. Identify hypotheses: The evaluator draws on broader generalisations and evidence from within the case to generate a series of (preferably competing) testable hypotheses about how an intervention may connect to an outcome. A Theory of Change exercise that has preceded the process tracing may provide a useful starting point for the generation of hypotheses.

2. Establish a timeline: Understanding the chronology of events is an important first step in analysing causal processes.

3. Construct a causal graph: A causal graph visually depicts the causal process through which X causes Y and follows the timeline. It identifies the independent variable(s) of interest and provides structure to the process of enquiry by showing all the points at which the relevant actor (an individual, an organisation or a group) made a choice that could have affected the result.

4. Identify alternative choices or events: At each relevant moment in the causal graph, a different choice could have been made. These alternatives should be identified.

5. Identify counterfactual outcomes: Counterfactuals are vital to process tracing.

6. Find evidence for the primary hypothesis: There is no single type of data collection method specified for process tracing. Data collection should be designed to match the evidence specified in the hypotheses being tested. Data collection involves in-depth case study analysis and, thus, is likely to be predominantly qualitative, including historical reports, interviews and observations, but quantitative data may also be used.

7. Find evidence for rival hypotheses: The final step is to repeat step 6 for each alternative explanation.

Process tracing case study (PDF)

Process tracing briefing (PDF)

Useful resources

For a short overview of process tracing see:

Bennett, A. (2010) ‘Process Tracing and Causal Inference’ in Brady, H. and Collier, D. (Eds.) Rethinking Social Inquiry, Rowman and Littlefield.

For a practical guide to undertaking process tracing:

Ricks, J. I. and Liu, A. H., 2018. ‘Process-tracing research designs: a practical guide’. PS: Political Science & Politics, 51(4), 842–846.

Ricks and Liu have placed a number of worked examples online to accompany their 2018 article on process tracing. These follow the same steps set out in their article (PDF).

General elimination methodology

Overview

General Elimination Methodology (GEM) is a theory-driven qualitative evaluation method that improves our understanding of cause and effect relationships by systematically identifying and then ruling out causal explanations for an outcome of interest (Scriven 2008; White and Phillips 2012).

Often used as a post-hoc evaluation method, it supports a better understanding of complexity.

Also called the Modus Operandi Approach, GEM is compared to detective work, in which suspects on a list are ruled out based on the presence or absence of motive, means and opportunity. In this respect, it describes an approach to thinking about causation that we all use, subconsciously, on a regular basis.

GEM has some similarities with process tracing, which could be seen as a more complex version of GEM and, therefore, GEM could be a useful introduction to some of the more complex small n methodologies.

What is involved?

GEM involves three primary steps (Scriven 2008; White and Phillips 2012):

Step 1: Establish a ‘List of Possible Causes’

First, the evaluators identify all the possible causes for the impact of interest. Secondly, they identify the necessary conditions for each possible cause and assess whether these conditions are present. This work can be based on secondary data analysis, such as a review of reports, articles, websites and other sources generally used to build a theory of change. The evaluators then need to identify rival explanations for the outcome of interest. This is generally achieved by engaging stakeholders in interviews or workshops.

Step 2: List the modus operandi for each cause

A modus operandi (MO) is a sequence of events or set of conditions that needs to occur or be present for the cause to be effective. In investigative terms, the detectives (i.e. the evaluators) draw up a list of means, motives and opportunities to be considered for each suspect (i.e. each cause). The list of MOs helps evaluators decide whether certain conditions should be included or rejected.

Step 3: Assess each case against the evidence available

For each possible cause, the evaluator will consider the presence or absence of the factors identified in the modus operandi, and only keep those whose modus operandi is completely present.
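The elimination logic of the three steps above can be sketched as a short Python function. This is a minimal illustration, assuming that each modus operandi can be reduced to a list of conditions judged present or absent; the causes, conditions, evidence and the helper name `eliminate_causes` are hypothetical, and a real GEM study would reach these judgements through qualitative evidence.

```python
def eliminate_causes(modus_operandi, evidence):
    """Keep only the causes whose modus operandi is completely present.

    modus_operandi maps each possible cause to the conditions it requires.
    evidence maps each condition to True (present) or False (absent);
    conditions with no evidence are treated as absent.
    """
    return [
        cause
        for cause, conditions in modus_operandi.items()
        if all(evidence.get(condition, False) for condition in conditions)
    ]


# Step 1 and Step 2: hypothetical causes and their modus operandi.
modus_operandi = {
    "mentoring programme": ["mentors recruited", "students attended sessions"],
    "new careers adviser": ["adviser in post before outcome", "students met adviser"],
}

# Step 3: hypothetical evidence on whether each condition was present.
evidence = {
    "mentors recruited": True,
    "students attended sessions": True,
    "adviser in post before outcome": False,
    "students met adviser": True,
}

remaining = eliminate_causes(modus_operandi, evidence)
print("Causes not ruled out:", remaining)
```

Treating missing evidence as absence is a deliberately strict design choice for this sketch; an evaluator might instead flag such causes for further data collection.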

General Elimination Theory case study (PDF)

General Elimination Theory briefing (PDF)

Useful resources

There is very little published material on General Elimination Methodology. The key paper in which GEM was first described in the terms set out here was:

Scriven, M. (2008) ‘A Summative Evaluation of RCT Methodology & An Alternative Approach to Causal Research’, Journal of Multi-Disciplinary Evaluation, 5(9) 11–24.

For an interesting discussion about causation between Scriven (the originator of GEM) and Cook (a proponent of ‘traditional’ models of counterfactual impact evaluation), see:

Cook, T.D., Scriven, M., Coryn, C. L. S., Evergreen, S. D. H (2010) ‘Contemporary Thinking About Causation in Evaluation: A Dialogue with Tom Cook and Michael Scriven’, American Journal of Evaluation, 31(1) 105–117. doi:10.1177/1098214009354918

Contribution analysis

Overview

As described by Mayne (2008, p. 1), ‘Contribution analysis explores attribution through assessing the contribution a programme is making to observed results.’

Four conditions are needed to infer causality in Contribution Analysis (Befani and Mayne 2014; Mayne 2008):

- Plausibility: The programme is based on a reasoned theory of change.

- Fidelity: The activities of the programme were implemented.

- Verified theory of change: The theory of change is verified by evidence such that the evaluator is confident that the chain of expected results occurred.

- Accounting for other influencing factors: Other factors influencing the programme were assessed and were either shown not to have made a significant contribution or, if they did, the relative contribution was recognised.

What is involved?

Mayne (2008) sets out six steps in Contribution Analysis.

Step 1: Set out the attribution problem to be addressed: It is important to determine the specific cause-effect question being addressed and the level of confidence required before exploring the type of contribution expected and assessing the plausibility of the expected contribution in relation to the size of the programme.

Step 2: Develop the theory of change and the risks to it: Contribution Analysis is based on a well-developed theory of change that specifies the results chain that links the programme to outcomes.

Step 3: Gather existing evidence on the theory of change: The evaluator should next gather evidence to assess the logic of the links in the Theory of Change. Evidence will cover programme results and activities as well as underlying assumptions and other influencing factors.

Step 4: Assemble and assess the contribution story and challenges to it: The contribution story can now be assembled and assessed critically. This will involve examining links in the results chain and assessing which of these are strong and which are weak, assessing the overall credibility of the contribution story and ascertaining whether stakeholders agree with the story.

Step 5: Seek out additional evidence: Based on the assessment of how robust the contribution story is, the evaluator should next identify the new data needed to address challenges to the credibility of the story. At this stage, it may be useful to update the theory of change or look at certain elements of the theory in more detail. If it is possible to verify or confirm the theory of change with empirical evidence, then it is reasonable to conclude that the intervention in question was a contributory cause for the outcome (Befani and Mayne 2014).

Step 6: Revise and strengthen the contribution story: Contribution Analysis works best as an iterative process and should, ideally, be seen as an ongoing process that incorporates new evidence as it emerges.
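One way to keep track of Steps 4 and 5 is to record, for each link in the results chain, how strong the evidence is and whether other influencing factors have been assessed. The Python sketch below illustrates that bookkeeping only. The links, the 0 to 3 evidence scale and the threshold are assumptions made for this example and are not part of Mayne's method, which relies on critical judgement rather than scoring.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """One link in the results chain of a theory of change."""
    description: str
    evidence_strength: int        # assumed scale: 0 = none ... 3 = strong
    rival_factors_assessed: bool  # have other influencing factors been examined?


def weak_links(chain, threshold=2):
    """Step 4: flag links with thin evidence or unexamined rival factors."""
    return [
        link for link in chain
        if link.evidence_strength < threshold or not link.rival_factors_assessed
    ]


# Hypothetical results chain for an outreach programme.
chain = [
    Link("Students attend the summer school", 3, True),
    Link("Attendance raises confidence in applying", 1, True),
    Link("Confident students submit applications", 2, False),
]

# Step 5: the flagged links show where additional evidence is needed.
to_strengthen = weak_links(chain)
for link in to_strengthen:
    print("Seek additional evidence for:", link.description)
```

Because Contribution Analysis is iterative (Step 6), the same check could be rerun each time new evidence is added to the chain.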

City College Norwich Contribution Analysis case study (PDF)

Contribution Analysis briefing

Useful resources

An interesting lecture featuring John Mayne talking about Contribution Analysis (19 minutes in) and starting with a worked example (3 mins 25 seconds in) can be found on YouTube.

Key reading

The originator of Contribution Analysis, John Mayne, has published several articles. The most commonly cited one, setting out the key elements of Contribution Analysis in a concise and accessible form, is:

Mayne, J. (2008) Contribution Analysis: An approach to exploring cause and effect. Brief 16, Institutional Learning and Change (ILAC) Initiative.

A few years later, Mayne took stock of developments in Contribution Analysis and wrote another, widely cited, article on the topic:

Mayne, J. (2012) ‘Contribution analysis: Coming of age?’ Evaluation, 18(3) 270–280. https://doi.org/10.1177/1356389012451663

More recently, he has again revisited Contribution Analysis:

Mayne, J. (2019) ‘Revisiting Contribution Analysis’, Canadian Journal of Program Evaluation, 34(2) 171–191.

Most significant change (Transformative evaluation)

Overview

The Most Significant Change (MSC) technique is a participatory, story-based method involving the collection and selection of significant change stories that have occurred in the field. Stories are usually elicited directly from programme participants. These stories are then passed upwards in the organisational hierarchy to panels of stakeholders who assess their significance, discuss how they relate to the wider implications of the changes and review the available evidence that supports them. This process helps reduce the number of stories to those identified as being the most significant by the majority of stakeholders.

While MSC was originally designed as an impact monitoring – rather than an evaluation – approach, it has since been adapted for use in impact evaluation by expanding the scale of story collection and the range of stakeholders involved. As an impact method, it is vulnerable to selection and social desirability bias. For these reasons, it is not typically used alone for impact evaluations, but usually precedes or complements summative evaluation and may best be used alongside more rigorous approaches, such as Contribution Analysis or Process Tracing, when tackling causal inference.

What is involved?

A detailed guide provided by Davies and Dart (2005) includes 10 steps, of which Steps 4, 5 and 6 are deemed fundamental while the remainder are discretionary.

Step 1: How to start and raise interest: Evaluators should be clear about the purpose of using MSC within the organisation. It may be useful to identify people excited by MSC who could act as catalysts in the process.

Step 2: Establishing ‘domains of change’: Domains of change are fuzzy categories defined to guide the significant change stories sought.

Step 3: Defining the reporting period: The frequency of collecting stories varies. Higher frequency reporting allows people to integrate the process more quickly but increases the cost of the process and risks the participants running out of identifiable significant change (SC) stories.

Step 4: Collecting stories of change: SC stories can be captured in different ways, including interviews and group sessions. Stories should be recorded as they are told and should be short and comprehensible to all stakeholders.

Step 5: Reviewing the stories within the organisational hierarchy: Storytellers discuss their stories and identify and submit the most significant ones to a level above. The same process is run at mid-levels, with stories selected and submitted to the next level. This process allows widely valued stories to be distinguished from those with only local importance. Depending on the evaluation aims and the scale of the project, the different levels may involve beneficiaries, field workers, managers, donors and investors.

Step 6: Providing stakeholders with regular feedback about the review process: Results must be fed back to storytellers.

Step 7: Implementing a process to verify the stories if necessary: Verification of the accounts can be beneficial.

Step 8: Quantification: While MSC is essentially qualitative, the quantification of surrounding information may be useful.

Step 9: Conducting secondary analysis and meta-monitoring: It may be useful to classify and examine the topics identified in SC stories using thematic coding.

Step 10: Revising the MSC process: MSC should not be used in an unreflective way; rather, the implementation should be adapted throughout the process.
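The way stories are filtered as they move up the organisational hierarchy can be illustrated with a short Python sketch. The sites, story identifiers and votes are hypothetical. Reducing each panel's decision to a vote count is also a simplifying assumption: in practice, selection is deliberative and the reasons for each choice are recorded and fed back.

```python
from collections import Counter


def select_most_significant(votes):
    """Return the story that received the most panel votes."""
    return Counter(votes).most_common(1)[0][0]


# Level 1 (hypothetical): each site panel votes among its own stories.
site_panel_votes = {
    "site A": ["A1", "A2", "A1", "A1"],
    "site B": ["B3", "B3", "B1"],
    "site C": ["C2", "C1", "C2"],
}
site_selections = {
    site: select_most_significant(votes)
    for site, votes in site_panel_votes.items()
}

# Level 2 (hypothetical): a programme-level panel votes only among the
# stories that the site panels passed upwards.
programme_panel_votes = ["B3", "A1", "B3", "C2", "B3"]
overall_selection = select_most_significant(programme_panel_votes)

print("Passed up by sites:", site_selections)
print("Most significant overall:", overall_selection)
```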

Case study: Plymouth Marjon University MSC (PDF)

Briefing: Most Significant Change (PDF)

Useful resources

A detailed description of the methodology and its application is available here:

Davies, R., and Dart, J. (2005) The ‘most significant change’ (MSC) technique. A guide to its use.

Qualitative comparative analysis (QCA)

Overview

Qualitative Comparative Analysis (QCA) is a ‘synthetic strategy’ (Ragin, 1987, p. 84) that allows for multiple conjunctural causation across observed cases. QCA analysis recognises that multiple causal pathways can lead to the same result and that each pathway consists of a combination of conditions (i.e. they are conjunctural). The method draws on the assumption that it is often a combination of multiple causes that has causal power (Befani, 2016). Furthermore, the same cause can have different effects depending on the other causes it is combined with and, thus, lead to different outcomes.

In QCA, each case is translated into a set of features, comprising several condition variables and one outcome variable. The method generally starts with a theory of change identifying the ‘conditions’ (factors) that may contribute to the anticipated outcomes. QCA is an iterative process that requires in-depth knowledge of specific cases.

There are three main techniques: crisp set (csQCA), fuzzy set (fsQCA) and multi-value (mvQCA). They differ in how they code and consider membership of the cases. In csQCA, membership is dichotomous (e.g. 1 = member, 0 = non-member). However, this dichotomous coding is not always suited to real-life situations. fsQCA was developed in response to this limitation, assigning graded values to conditions such as quality or satisfaction and allowing for variance in observations. In fsQCA and mvQCA, membership is multichotomous and partial (e.g. 1 = full member, 0.8 = strong but not full member, 0.3 = weak member, 0 = non-member). Here, we will focus on csQCA as a good introduction to the methodology. The logic underpinning the technique is then extended to fsQCA and mvQCA.

What is involved?

Rihoux and De Meur (2009) identify six steps for csQCA:

Step 1: Building a dichotomous data table: Drawing on the theory of change, data is coded for each condition and outcome dichotomously (e.g. 1 = member, 0 = non-member).

Step 2: Constructing a Truth Table: Using software, a first ‘synthesis’ of the raw data is produced in what is called a truth table. This is a table of configurations (i.e. a number of combinations of conditions associated with a given outcome).

Step 3: Resolving Contradictory Configurations: Contradictory configurations are a normal part of QCA. This is when the reiterative dialogue between data and theory occurs. The evaluators need to resolve these contradictions by using their knowledge of the cases and reconsidering their theoretical perspective in order to obtain more coherent data.

Step 4: Boolean Minimisation: This step is generally completed using software and a synthesis of the truth table. It identifies conditions that are either present or absent in configurations leading to the same outcome.

Step 5: Bringing in the ‘Logical Remainders’ Cases: Logical remainders are a pool of potential cases that can be used to produce a shorter (i.e. more parsimonious) causal explanation.

Step 6: Consistency and coverage: When running a Boolean minimisation, specialist software can calculate consistency and coverage for each configuration and the solution as a whole.
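The mechanics behind several of these steps can be shown with a small Python sketch. It is not a substitute for specialist QCA software: the cases and conditions are hypothetical, only a single pairwise minimisation step is shown, and consistency and coverage are computed with the simple crisp-set definitions (the share of cases with a configuration that show the outcome, and the share of cases with the outcome that a configuration accounts for).

```python
from collections import defaultdict

# Step 1 (hypothetical data): dichotomous data table, 1 = member, 0 = non-member.
conditions = ("mentoring", "summer_school", "parental_support")
cases = [
    ("case1", (1, 1, 0), 1),
    ("case2", (1, 1, 0), 1),
    ("case3", (1, 0, 1), 1),
    ("case4", (1, 0, 1), 0),
    ("case5", (0, 1, 1), 0),
    ("case6", (0, 0, 0), 0),
    ("case7", (1, 1, 1), 1),
]


def truth_table(cases):
    """Step 2: group cases by configuration and summarise their outcomes."""
    rows = defaultdict(list)
    for _name, config, outcome in cases:
        rows[config].append(outcome)
    table = {}
    for config, outcomes in rows.items():
        n, positives = len(outcomes), sum(outcomes)
        table[config] = {
            "n": n,
            "consistency": positives / n,          # Step 6
            "contradictory": 0 < positives < n,    # Step 3: mixed outcomes
        }
    return table


def coverage(config, cases):
    """Step 6: share of cases with the outcome that show this configuration."""
    positive_configs = [cfg for _name, cfg, outcome in cases if outcome == 1]
    return positive_configs.count(config) / len(positive_configs)


def merge(a, b):
    """Step 4 (one step only): if two configurations with the same outcome
    differ in exactly one condition, that condition is redundant ('-')."""
    differing = [i for i, (x, y) in enumerate(zip(a, b)) if x != y]
    if len(differing) != 1:
        return None
    return tuple("-" if i == differing[0] else x for i, x in enumerate(a))


table = truth_table(cases)
contradictory = [cfg for cfg, row in table.items() if row["contradictory"]]
simplified = merge((1, 1, 0), (1, 1, 1))

print("Contradictory configurations:", contradictory)
print("Coverage of (1, 1, 0):", coverage((1, 1, 0), cases))
print("Simplified configuration:", dict(zip(conditions, simplified)))
```

In this hypothetical data set, the configuration (1, 0, 1) is contradictory because case3 and case4 share it but differ in outcome. This is the kind of result that sends evaluators back to their case knowledge in Step 3.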

Case study: University of Leeds QCA (PDF)

Briefing: QCA

Useful resources

Compass is a website that specialises in QCA, listing events and providing extensive resources including a very comprehensive bibliography.

Ragin’s (2017) User’s Guide to fsQCA is a good starting point for fsQCA.

A good introduction to QCA is:

Befani, B. (2016) Pathways to change: Evaluating development interventions with Qualitative Comparative Analysis (QCA). Stockholm: Expertgruppen för biståndsanalys (the Expert Group for Development Analysis). Available here.

Comparative case study

Overview

A comparative case study (CCS) is defined as ‘the systematic comparison of two or more data points (“cases”) obtained through use of the case study method’ (Kaarbo and Beasley 1999, p. 372). A case may be a participant, an intervention site, a programme or a policy. Case studies have a long history in the social sciences, yet for a long time, they were treated with scepticism (Harrison et al. 2017). The advent of grounded theory in the 1960s led to a revival in the use of case-based approaches. From the early 1980s, the increase in case study research in the field of political sciences led to the integration of formal, statistical and narrative methods, as well as the use of empirical case selection and causal inference (George and Bennett 2005), which contributed to its methodological advancement. Now, as Harrison and colleagues (2017) note, CCS:

“Has grown in sophistication and is viewed as a valid form of inquiry to explore a broad scope of complex issues, particularly when human behavior and social interactions are central to understanding topics of interest.”

It is claimed that CCS can be applied to detect causal attribution and contribution when the use of a comparison or control group is not feasible (or not preferred). Comparing cases enables evaluators to tackle causal inference through assessing regularity (patterns) and/or by excluding other plausible explanations. In practical terms, CCS involves proposing, analysing and synthesising patterns (similarities and differences) across cases that share common objectives.

What is involved?

Goodrick (2014) outlines the steps to be taken in undertaking CCS.

Key evaluation questions and the purpose of the evaluation: The evaluator should explicitly articulate the adequacy and purpose of using CCS (guided by the evaluation questions) and define the primary interests. Formulating key evaluation questions allows the selection of appropriate cases to be used in the analysis.

Propositions based on the theory of change: Theories and hypotheses that are to be explored should be derived from the theory of change (or, alternatively, from previous research around the initiative, existing policy or programme documentation).

Case selection: Advocates for CCS approaches claim an important distinction between case-oriented small n studies and (most typically large n) statistical/variable-focused approaches in terms of the process of selecting cases: in case-based methods, selection is iterative and cannot rely on convenience and accessibility. ‘Initial’ cases should be identified in advance, but case selection may continue as evidence is gathered. Various case-selection criteria can be identified depending on the analytic purpose (Vogt et al., 2011). These may include:

- Very similar cases

- Very different cases

- Typical or representative cases

- Extreme or unusual cases

- Deviant or unexpected cases

- Influential or emblematic cases

Identify how evidence will be collected, analysed and synthesised: CCS often applies mixed methods.

Test alternative explanations for outcomes: Following the identification of patterns and relationships, the evaluator may wish to test the established propositions in a follow-up exploratory phase. Approaches applied here may involve triangulation, selecting contradicting cases or using an analytical approach such as Qualitative Comparative Analysis (QCA).

Comparative Case Study: Impact evaluations with small cohorts: Methodology guidance (PDF)

Briefing: Comparative Case Study (PDF)

Useful resources

A webinar shared by Better Evaluation with an overview of using CCS for evaluation.

A short overview describing how to apply CCS for evaluation:

Goodrick, D. (2014). Comparative Case Studies, Methodological Briefs: Impact Evaluation 9, UNICEF Office of Research, Florence.

An extensively used book that provides a comprehensive critical examination of case-based methods:

Byrne, D. and Ragin, C. C. (2009). The Sage handbook of case-based methods. Sage Publications.

Agent-based modelling

Overview

Social sciences often aspire to move beyond exploring individual behaviours and seek to understand how the interaction between individuals leads to large-scale outcomes. In this process, an understanding of complex systems requires more than understanding their parts. Agent-based modelling (ABM) is a bottom-up modelling approach in which macro-level system behaviour is modelled through the behaviours of micro-level autonomous, interacting agents. ABM can generate deep quantitative and qualitative insights into complex socio-economic, natural and man-made systems through simulating the interactions of processes, diversity and behaviours on different scales. Social interventions are generally designed to influence micro-level behaviour (e.g. the behaviour of an individual or household). Similarly, evaluations are generally interested in explaining micro-level behavioural changes. ABM, in contrast, allows macro-level mechanisms to be represented, making it well-suited to evaluate complex programmes and policies, for example, the evaluation of multi-intervention outreach programmes.

Recent ABM applications have been made possible by advances in the development of specialised agent-based modelling software, more granular and larger data sets and advancements in computer performance (Macal and North 2010). However, ABM is a complex and resource-intensive method: the implementation process requires expert modellers, the results can be difficult to understand and communicate, and the application of ABM can be costly.

What is involved?

The underlying premise of ABM is its ‘complex system-thinking’. That is, the complex world is comprised of numerous interrelated individuals, whose interactions create higher-level features. In ABM, such emergent phenomena are generated from the bottom up (Bonabeau 2002) by seeking to identify the underlying rules that govern the behaviours of whole systems. ABM presumes that simple rules behind individual actions can lead to coherent group behaviour, and that even a small change in these rules can radically change group behaviour.

A typical ABM has three main elements:

- Agents: Agents are autonomous. They are ‘active, initiating their actions to achieve their internal goals, rather than merely passive, reactively responding to other agents and the environment’ (Macal and North 2010, p. 153).

- The relationships between agents: ABM is concerned with two main questions: who interacts with whom (as agents only connect to a subset of agents – termed neighbours), and how these neighbours are connected (the topology of connectedness).

- Environments: Information about the environment in which agents interact may be needed beyond mere spatial location: while interacting with their environment, agents are constrained in their actions by the infrastructure, resources, capacities or links that the environment can provide.

These elements need to be identified, modelled and programmed to create an ABM.
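A deliberately small Python sketch shows how the three elements fit together. Everything in it is an assumption made for illustration: agents are students who either do or do not intend to apply to higher education, relationships form a ring in which each agent has two neighbours, and the environment is reduced to a cap on the number of outreach places available per step. Real ABMs are usually built with specialist software and calibrated against data.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """A student who adopts an intention to apply once enough neighbours have."""
    threshold: float       # share of neighbours needed to persuade this agent
    intends: bool = False


def neighbours(i, n, k=1):
    """Relationships: ring topology, the k agents on each side of agent i."""
    return [(i + d) % n for d in range(-k, k + 1) if d != 0]


def step(agents, places):
    """One time step. `places` is the environment's capacity: the maximum
    number of agents who can newly form the intention in this step."""
    n = len(agents)
    willing = []
    for i, agent in enumerate(agents):
        if agent.intends:
            continue
        nbrs = neighbours(i, n)
        share = sum(agents[j].intends for j in nbrs) / len(nbrs)
        if share >= agent.threshold:
            willing.append(i)
    admitted = willing[:places]  # the environment constrains the agents
    for i in admitted:
        agents[i].intends = True
    return len(admitted)


def run(agents, places, max_steps=50):
    """Run until nothing changes; return the count of intenders per step."""
    history = [sum(a.intends for a in agents)]
    for _ in range(max_steps):
        changed = step(agents, places)
        history.append(sum(a.intends for a in agents))
        if changed == 0:
            break
    return history


def make_population(n, threshold):
    """n identical agents, with agent 0 seeded as already intending to apply."""
    agents = [Agent(threshold) for _ in range(n)]
    agents[0].intends = True
    return agents


print(run(make_population(10, threshold=0.5), places=2))
print(run(make_population(10, threshold=0.6), places=2))
```

With a threshold of 0.5, the intention spreads around the whole ring. Raising the threshold slightly to 0.6 stops it spreading at all, which illustrates how a small change in an individual-level rule can radically change group behaviour.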

Agent-based modelling case study: Impact evaluation with small cohorts: Methodology guidance (PDF)

Briefing: Agent-based modelling (PDF)

Useful resources

Axelrod and Tesfatsion’s On-Line Guide for Newcomers to ABM provides a good introduction to ABM.

An introductory tutorial into the background and application of ABM:

Macal, C. and North, M. (2014) Introductory tutorial: Agent-based modeling and simulation. In Proceedings of the Winter Simulation Conference 2014, pp. 6–20. IEEE.

A widely read book that provides a simple overview of the methodology, including how to construct simple ABM:

Gilbert, N. and Troitzsch, K. (2005) Simulation for the Social Scientist, McGraw-Hill.
